Welcome back to The AGI Observer. In Part 3 we build on our Introduction (0/5), Part 1 on Scaling Deep Learning, and Part 2 on Neuro-Symbolic Systems to examine Cognitive Architectures: what they are in plain language, why they matter now, who is building them, where the bottlenecks are, and how we will know they are working. As before, we end with a sample educational virtual portfolio to show how this pathway maps to real companies and infrastructure. Educational only; not financial advice.

Executive summary

Thesis. Cognitive architectures aim to assemble an artificial mind: cooperating modules for perception, working memory, long-term memory, planning, action/tool-use, and self-monitoring that actually work together.

Why it matters. These systems are more transparent and controllable by design: users can see steps, sources, and decisions. They are also a plausible route to “human-like” generality because skills can transfer across tasks.

Near term. Expect project agents that remember across days, plan first, execute with tools, and provide timeline-style traces of what happened.

Risk. System complexity, brittle interfaces between modules, latency from multi-step plans, and slow iteration due to weak end-to-end benchmarks.

What to watch. Agent frameworks with persistent memory, global-workspace-style attention (a shared board where ideas compete), and reliable cross-task transfer.

1) What “cognitive architecture” means in practice

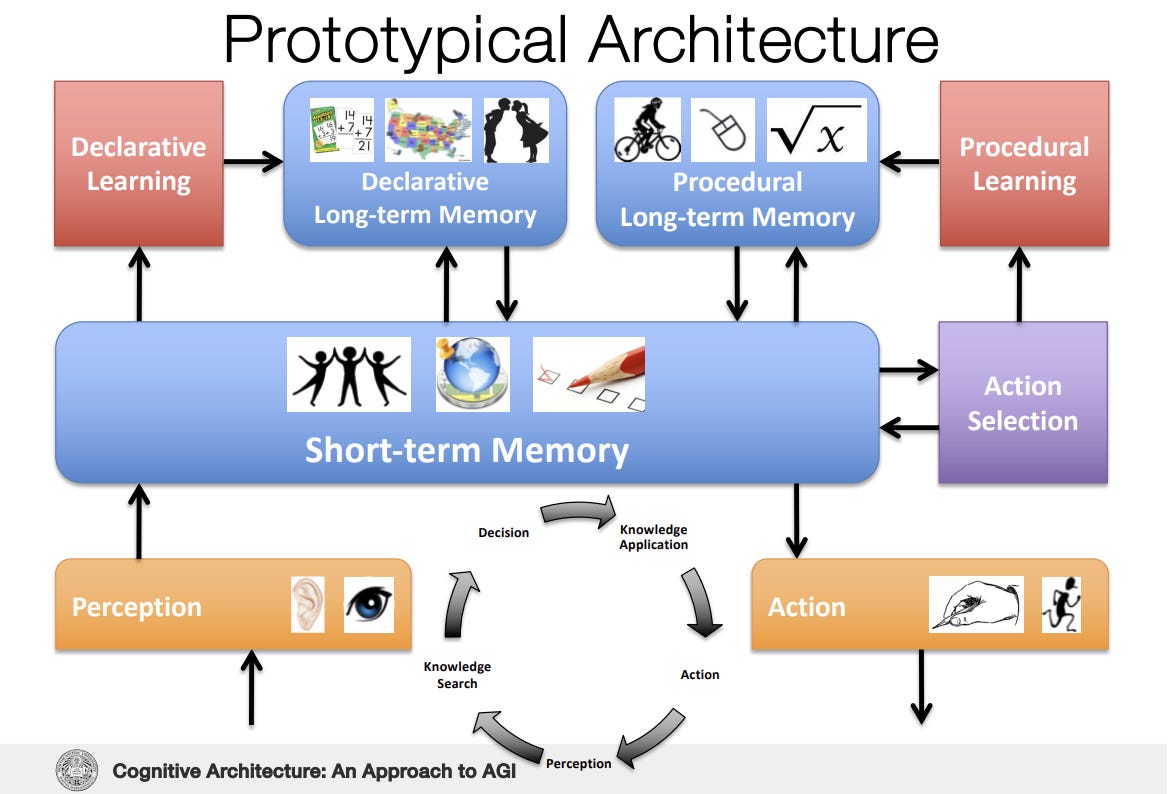

Instead of one giant model guessing everything, roles are separated and coordinated:

Perception understands inputs (text, images, audio, video).

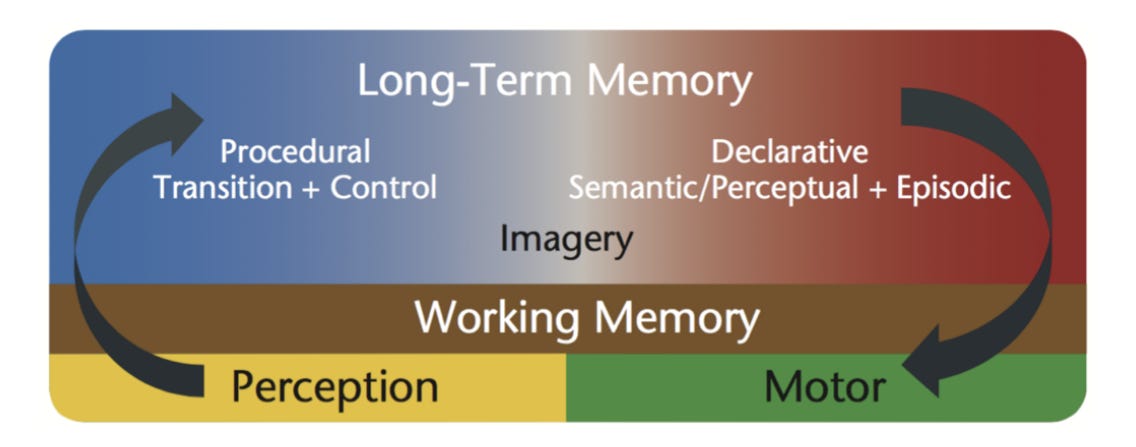

Working memory keeps the active details on hand.

Long-term memory stores and retrieves facts and past episodes.

Planning breaks goals into steps and chooses tools.

Action & tools run searches, code, spreadsheets, databases, or APIs.

Self-monitoring checks progress, fixes errors, and asks for help when needed.

2) Why this pathway matters

Real projects last longer than a chat window. Cognitive architectures keep momentum across days and make outcomes auditable. They also support skill transfer: once a system learns “how to reconcile a spreadsheet” or “how to draft a risk summary,” that procedure can be reused in new contexts without starting from zero.

3) How it works under the hood

Structured memory, not guesswork. Items are saved with explicit keys (who, what, when, why), so retrieval is a deliberate lookup rather than a guess.
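To make the idea of keyed memory concrete, here is a minimal Python sketch. The class names `MemoryItem` and `MemoryStore`, the filter parameters, and the sample entries are all hypothetical and invented for illustration; a production system would likely persist and index items rather than hold them in a list.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class MemoryItem:
    who: str        # actor that produced the item
    what: str       # the content being remembered
    when: datetime  # when it happened
    why: str        # the reason it was recorded


class MemoryStore:
    """Toy in-memory store (an assumption for this sketch, not a real library)."""

    def __init__(self) -> None:
        self._items: List[MemoryItem] = []

    def save(self, item: MemoryItem) -> None:
        self._items.append(item)

    def find(
        self,
        who: Optional[str] = None,
        why: Optional[str] = None,
        since: Optional[datetime] = None,
    ) -> List[MemoryItem]:
        """Return items matching every filter that was given, oldest first."""
        hits = [
            m for m in self._items
            if (who is None or m.who == who)
            and (why is None or m.why == why)
            and (since is None or m.when >= since)
        ]
        return sorted(hits, key=lambda m: m.when)


# Made-up sample entries, purely illustrative.
store = MemoryStore()
store.save(MemoryItem("finance-agent", "May ledger matched the bank statement",
                      datetime(2024, 5, 31), "month-end close"))
store.save(MemoryItem("finance-agent", "April ledger had two unmatched entries",
                      datetime(2024, 4, 30), "month-end close"))
store.save(MemoryItem("support-agent", "Customer asked for a refund status",
                      datetime(2024, 5, 12), "open case"))

# Deliberate retrieval: ask for a specific reason and time window.
recent_closes = store.find(why="month-end close", since=datetime(2024, 5, 1))
print([m.what for m in recent_closes])
```

The point of the design is that the query names the fields it cares about, so the same question returns the same items every time.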

Plans before prose. The agent writes a short plan, then executes it; plans are cheap to update when reality disagrees.
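A plan-then-execute loop with cheap replanning might look roughly like the sketch below. Everything here is assumed for illustration: the `Step` type, the stand-in planner `make_plan`, the fallback rule in `revise_plan`, and the three demo tools. In a real agent a model would typically produce the plan, and steps would pass results to each other.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str  # name of the tool to call
    arg: str   # argument handed to that tool


def make_plan(goal: str) -> List[Step]:
    """Stand-in planner (hypothetical): fetch the data, then summarize it."""
    return [Step("fetch", goal), Step("summarize", goal)]


def revise_plan(remaining: List[Step], failed: Step) -> List[Step]:
    """Cheap update: swap the failed step for a fallback and keep the rest."""
    return [Step("fetch_from_cache", failed.arg)] + remaining


def run(goal: str, tools: Dict[str, Callable[[str], str]],
        max_revisions: int = 2) -> List[str]:
    """Execute the plan step by step, recording a trace of what happened."""
    trace: List[str] = []
    plan = make_plan(goal)
    while plan:
        step, plan = plan[0], plan[1:]
        try:
            tools[step.tool](step.arg)
        except RuntimeError as err:
            if max_revisions == 0:
                trace.append(f"{step.tool}: failed ({err}); giving up")
                break
            max_revisions -= 1
            trace.append(f"{step.tool}: failed ({err}); replanning")
            plan = revise_plan(plan, step)
            continue
        trace.append(f"{step.tool}: ok")
    return trace


# Demo tools (made up): the live source is down, the cache works.
def fetch(arg: str) -> str:
    raise RuntimeError("source offline")


def fetch_from_cache(arg: str) -> str:
    return f"cached data for {arg}"


def summarize(arg: str) -> str:
    return f"summary of {arg}"


DEMO_TOOLS = {"fetch": fetch, "fetch_from_cache": fetch_from_cache,
              "summarize": summarize}
trace = run("Q2 report", DEMO_TOOLS)
print("\n".join(trace))
```

The revision budget (`max_revisions`) is one simple way to keep a replanning loop from running forever when every fallback also fails.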

Checks at each step. Rules and validators verify numbers, formats, and policies.
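One simple way to picture these checks is a list of small validator functions that run on every step's output. The sketch below covers a number check, a format check, and a policy check; the field names, the 10,000 approval limit, and the sample outputs are assumptions made up for this example.

```python
import re
from typing import Callable, List, Optional

# A validator returns an error message, or None when the check passes.
Validator = Callable[[dict], Optional[str]]


def totals_match(output: dict) -> Optional[str]:
    """Number check: the stated total must equal the sum of line items."""
    if abs(sum(output["line_items"]) - output["total"]) > 0.005:
        return "total does not equal the sum of line items"
    return None


def date_is_iso(output: dict) -> Optional[str]:
    """Format check: dates must look like YYYY-MM-DD."""
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", output["date"]):
        return "date is not in YYYY-MM-DD format"
    return None


def within_approval_limit(output: dict) -> Optional[str]:
    """Policy check (hypothetical rule): large amounts need an approver."""
    if output["total"] > 10_000 and not output.get("approved_by"):
        return "amount above limit requires an approver"
    return None


def check_step(output: dict, validators: List[Validator]) -> List[str]:
    """Run every validator and collect the failure messages."""
    errors: List[str] = []
    for validate in validators:
        message = validate(output)
        if message is not None:
            errors.append(message)
    return errors


VALIDATORS = [totals_match, date_is_iso, within_approval_limit]

good = {"line_items": [1200.0, 350.5], "total": 1550.5, "date": "2024-05-31"}
bad = {"line_items": [1200.0, 350.5], "total": 1600.0, "date": "31/05/2024"}

print(check_step(good, VALIDATORS))
print(check_step(bad, VALIDATORS))
```

An agent loop could refuse to move to the next step, or ask a person for help, whenever `check_step` returns a non-empty list.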

Global workspace. A central board where candidate ideas or memories are surfaced and the system selects what to focus on.
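As a toy illustration of the shared-board idea, the sketch below lets modules post candidates with a salience score and then selects the strongest one as the current focus. The `Candidate` and `Workspace` names and the highest-score-wins rule are assumptions for this example; published global-workspace systems use richer competition mechanisms than a single number.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    source: str      # module that proposed the item
    content: str     # the idea or memory being surfaced
    salience: float  # how strongly the module bids for attention


class Workspace:
    """Toy central board (hypothetical): modules post, one candidate wins."""

    def __init__(self) -> None:
        self._candidates: List[Candidate] = []
        self.focus: Optional[Candidate] = None

    def post(self, candidate: Candidate) -> None:
        self._candidates.append(candidate)

    def select(self) -> Optional[Candidate]:
        """Pick the most salient candidate, make it the focus, clear the board."""
        if not self._candidates:
            return None
        self.focus = max(self._candidates, key=lambda c: c.salience)
        self._candidates.clear()
        return self.focus


board = Workspace()
board.post(Candidate("long-term memory", "Last month's totals were off by 2 entries", 0.6))
board.post(Candidate("perception", "New bank statement just arrived", 0.9))
board.post(Candidate("planner", "Next step: draft the summary", 0.4))

winner = board.select()
print(winner.source, "->", winner.content)
```

Every other module can then read `board.focus`, which is what makes the selected item "global" in this sketch.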

4) Where we are today

Agent frameworks are maturing: plan-and-execute loops, tool-calling, and memory stores are becoming standard. Long context helps, but structured memory remains more reliable and cost-effective. Early wins appear in policy and legal drafting with citations, finance and operations tasks that repeat monthly, “living” research files that update as data arrives, customer cases that span days, and software maintenance where planning and history reduce rework.

5) Who is building it

SOAR (John Laird, Paul Rosenbloom)

ACT-R (John R. Anderson)

Global Workspace / LIDA traditions (Bernard Baars; Stanislas Dehaene on neural evidence)

OpenCog / Hyperon (Ben Goertzel)

Numenta (Jeff Hawkins) on cortex-inspired approaches

Academic groups at CMU, JHU, RPI, and others

6) Sample educational virtual portfolio - Cognitive Architecture stack

Education only; not financial advice. Equal weight within buckets.

Agent & workflow platforms (30%)

Microsoft (MSFT); Alphabet/Google (GOOGL); ServiceNow (NOW); UiPath (PATH)

Memory, search & data (20%)

Snowflake (SNOW); Elastic (ESTC); MongoDB (MDB)

Integration & observability (15%)

Datadog (DDOG); Confluent (CFLT)

Compute & acceleration (15%)

NVIDIA (NVDA); AMD (AMD)

Collaboration & content backbone (10%)

Atlassian (TEAM); Box (BOX)

Industrial & edge execution (10%)

Rockwell Automation (ROK); ABB (ABB)

Closing thought

Part 1 showed how Scaling Deep Learning keeps expanding raw capability and lowering costs. Cognitive architectures organize that capability into goal-directed systems that remember, plan, adapt, and explain themselves, a necessary step if we want dependable intelligence on real work that stretches beyond a single prompt.

Prepared by AI Monaco

AI Monaco is a leading-edge research firm that specializes in utilizing AI-powered analytics and data-driven insights to provide clients with exceptional market intelligence. We focus on offering deep dives into key sectors like AI technologies, data analytics and innovative tech industries.
