Welcome to our new mini-series on Artificial General Intelligence. This mini-series maps the five most probable pathways to get there: what each really is, who’s driving it, where the bottlenecks are and how we’ll know it’s working. To keep this grounded, each part ends with a sample educational virtual portfolio. Why include it? Because we want to show how frontier research translates into reality: which companies, infrastructure layers, or tooling stacks are actually positioned if that pathway advances. We want to serve as a bridge from laboratories to reality, offering a concrete angle on otherwise abstract progress. As always, educational only; not financial advice.

1. Introduction

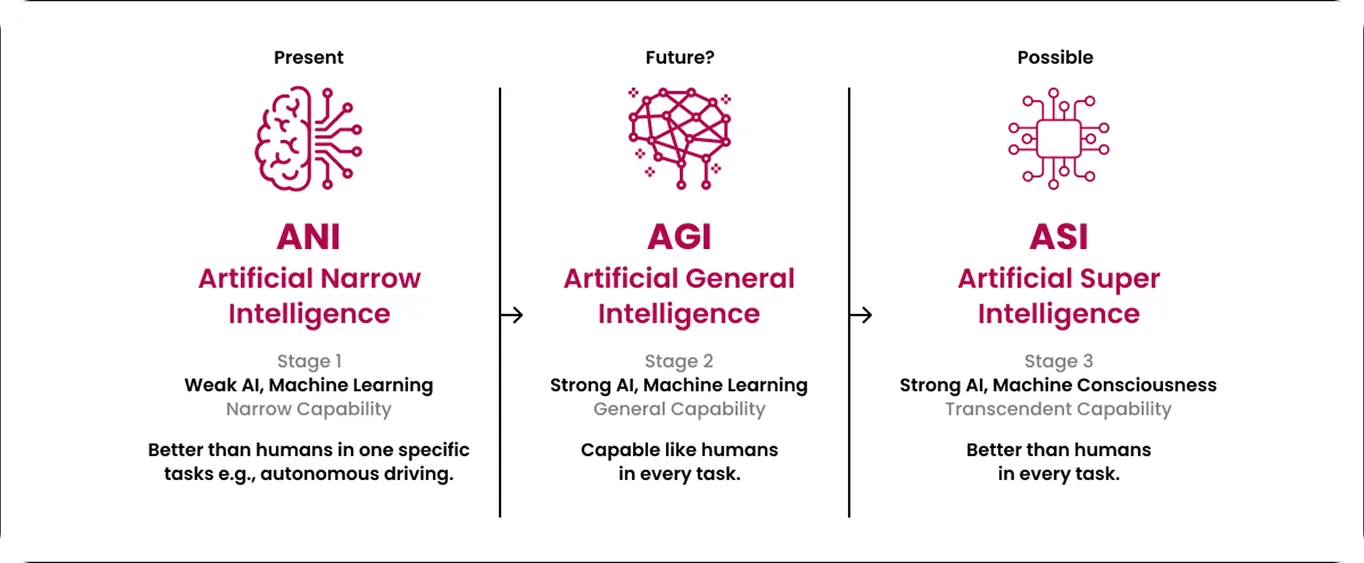

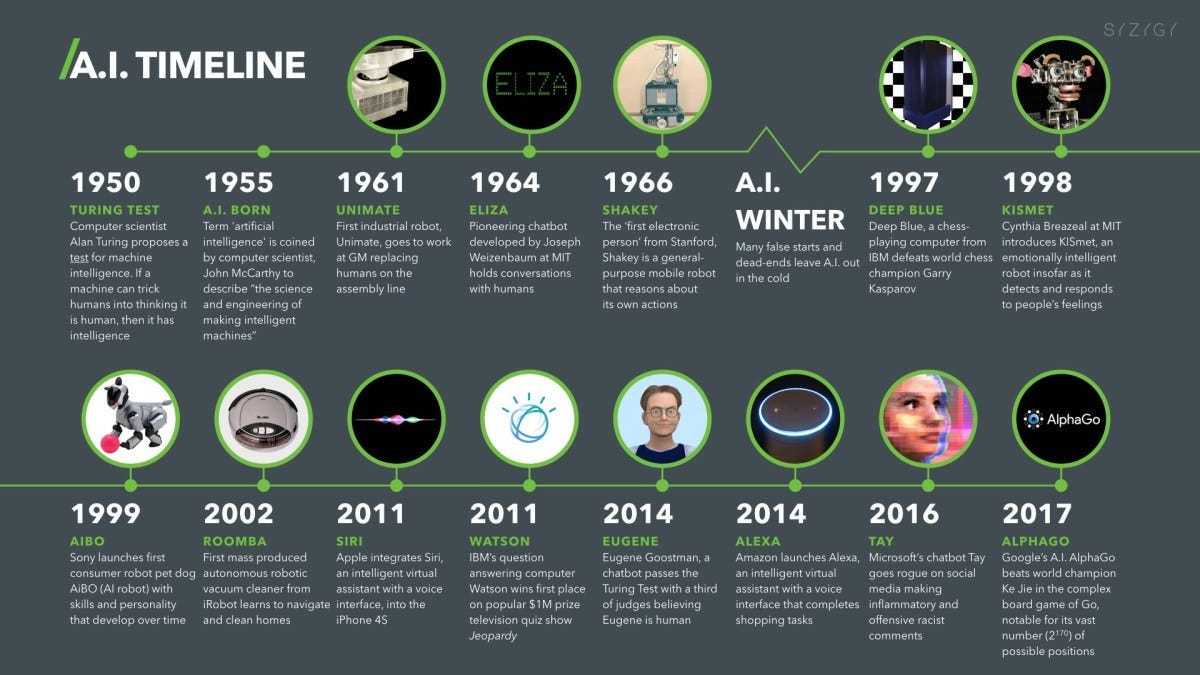

AI has moved from impressive demos to everyday infrastructure. Meanwhile, credible estimates for reaching human-level AI range from roughly 5 to 15 years, with rising evidence that raw scale will need reinforcement from reasoning, memory, embodiment, or open-ended learning. We’re approaching a fork in the road: either scaling alone keeps compounding, or we converge on hybrid systems that integrate learning with logic, planning, and active exploration.

This series breaks down the five most plausible pathways to AGI. Each piece will explain the core idea in plain language, show where research really stands, estimate timelines with explicit uncertainty, and highlight the signals that matter for strategy and capital allocation.

For paid subscribers: At the end of every part, you’ll find a sample educational “virtual portfolio” of publicly listed companies mapped to that pathway (infrastructure, models, tooling, data, applications). Education only; not financial advice.

Five potential pathways that could lead us to AGI

Each new publication will focus on a different pathway, explaining in detail the road ahead, why it matters and the current state of the art. Below is a general overview of what is coming in future publications:

Part 1: Scaling Deep Learning

Thesis: Bigger, better-trained, better-tooled foundation models could cross the AGI threshold.

Why it matters: Fastest compounding; huge demand for compute, data, power, memory; immediate productivity impacts.

What to watch: Unprecedented-scale training runs, tool use & test-time learning, collapsing cost per token ($/token), progress on tough reasoning benchmarks.

Who’s backing it: OpenAI (Altman, Brockman), Google DeepMind (Hassabis, Vinyals, Silver), Anthropic (Dario & Daniela Amodei), xAI (Musk, Babuschkin), Microsoft, Google, NVIDIA; Meta (LeCun) pushes large models + world-modeling.

Obstacles: Diminishing returns/data exhaustion; compute–power–memory bottlenecks; abstraction/logic ceilings; opacity & alignment; capex concentration risk.

Essential papers (starter pack): Attention Is All You Need; Scaling Laws for Neural Language Models; Chinchilla (compute-optimal training); Chain-of-Thought Prompting; Deep RL from Human Preferences (RLHF).

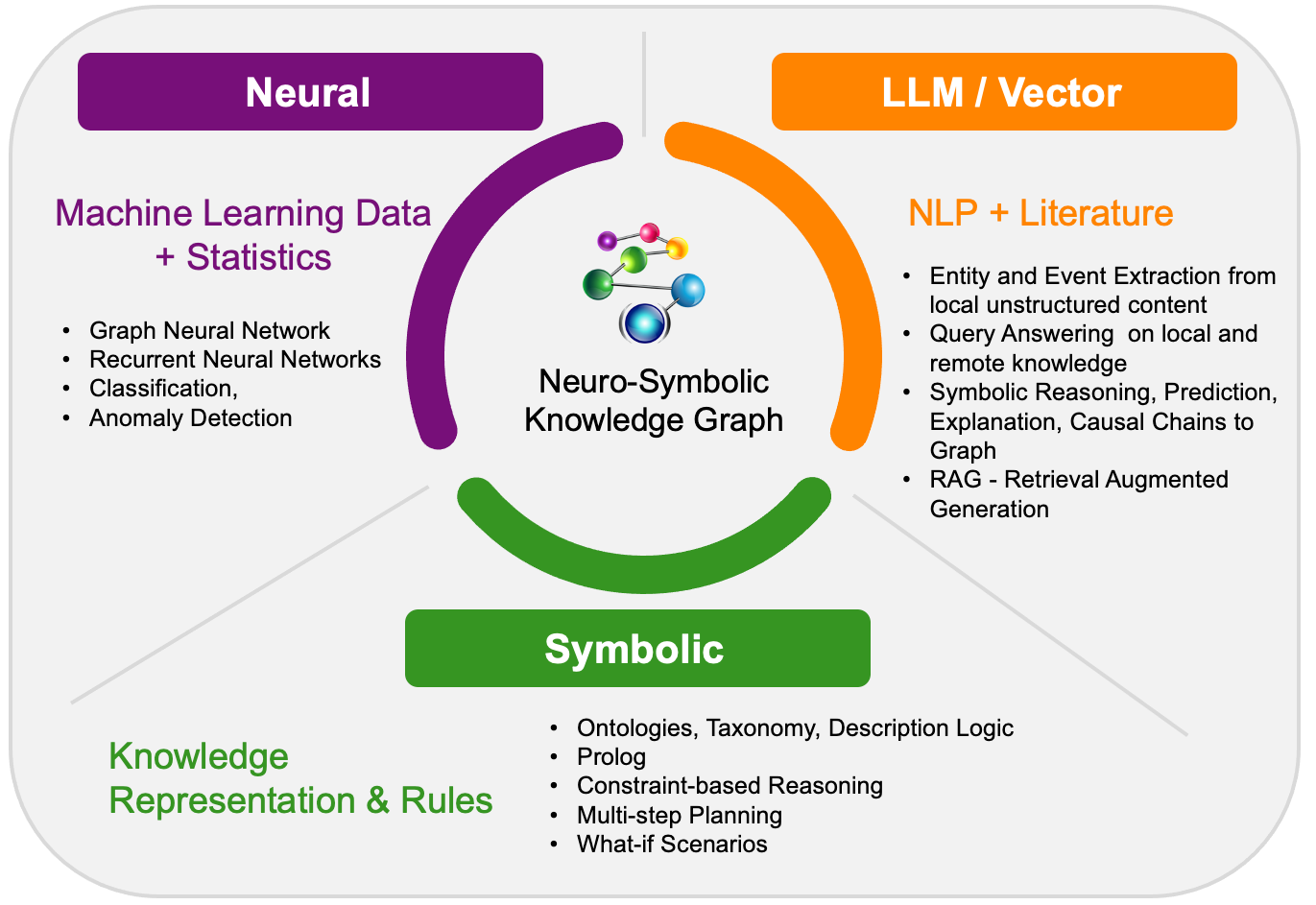

Part 2: Neuro-Symbolic Systems

Thesis: Combine neural learning with explicit logic/knowledge so models can explain, check, and plan.

Why it matters: Tackles hallucinations and brittle reasoning; attractive for regulated domains.

What to watch: Built-in reasoning modules, knowledge-graph integrations, measurable drops in logical/factual error rates.

Who’s backing it: IBM Research/MIT-IBM, DARPA XAI/3rd-wave programs; Stanford, MIT CSAIL, Oxford, Edinburgh; advocates like Gary Marcus; researchers Josh Tenenbaum, Yejin Choi, Stuart Russell, Pedro Domingos, Luc De Raedt.

Obstacles: Differentiability gap between discrete logic & continuous nets; knowledge engineering & freshness; latency/overhead; hard-to-prove general gains; scarce hybrid tooling/talent.

Essential papers (starter pack): Neuro-Symbolic Concept Learner; Differentiable ILP; Logic Tensor Networks; survey on neural-symbolic learning; Probabilistic Soft Logic.

Part 3: Cognitive Architectures

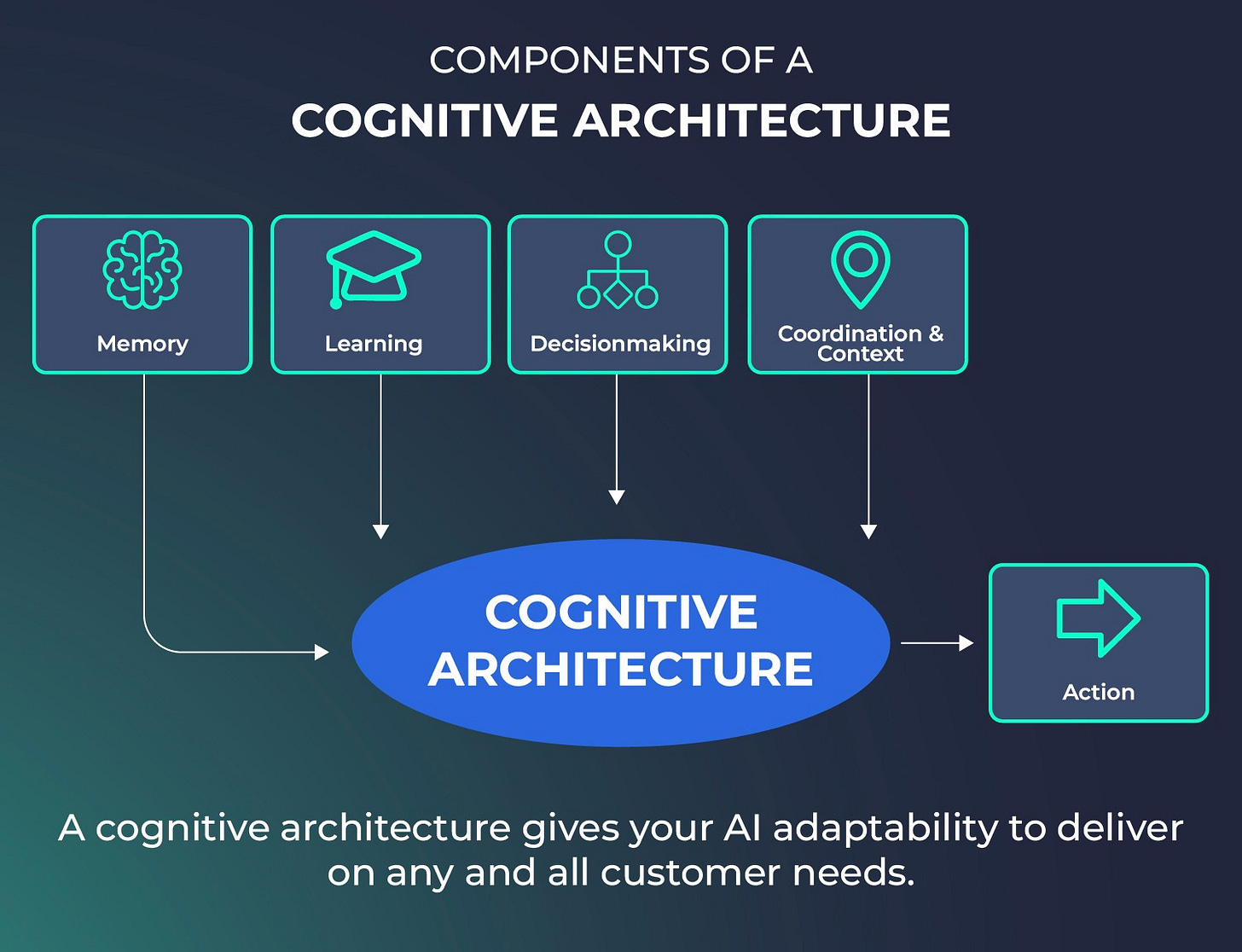

Thesis: Build an artificial mind: modules for memory, planning, perception, and self-reflection that actually work together.

Why it matters: More transparent and controllable by design; a path to “human-like” generality.

What to watch: Agent frameworks with persistent memory, global-workspace-style attention, reliable cross-task transfer.

Who’s backing it: SOAR (Laird, Rosenbloom), ACT-R (John R. Anderson), LIDA/Global Workspace (Baars; Dehaene for neuroscience), OpenCog/Hyperon (Ben Goertzel); Numenta (Jeff Hawkins); academic cognitive-AI groups (CMU, JHU, RPI).

Obstacles: System complexity & brittle interfaces; learning “glue” across modules; throughput/latency; slow iteration; weak end-to-end benchmarks.

Essential papers (starter pack): The Soar Cognitive Architecture; ACT-R: An Integrated Theory of the Mind; Global Neuronal Workspace reviews; LIDA computational model.

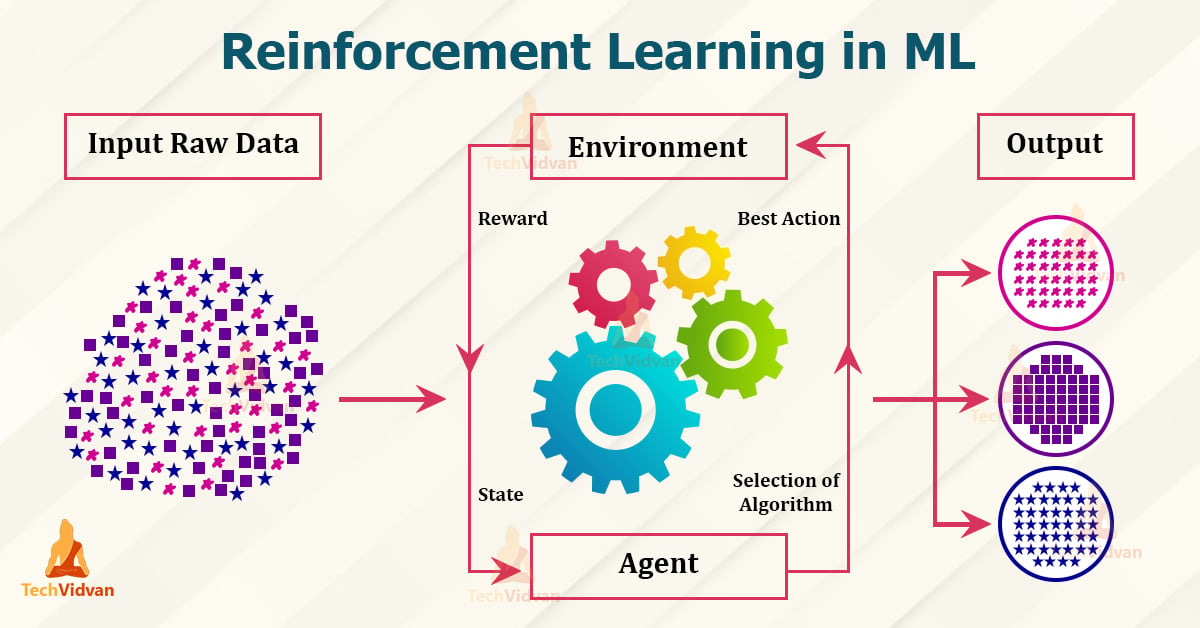

Part 4: Evolution + Reinforcement Learning

Thesis: Let intelligence emerge by training and evolving agents in rich, open-ended worlds.

Why it matters: Could discover novel architectures and grounded common sense; heavy on simulation and hardware.

What to watch: Large-scale multi-agent environments, ever-harder curricula, policies that generalize outside the sandbox.

Who’s backing it: Google DeepMind (AlphaGo/AlphaZero/MuZero; multi-agent RL), OpenAI (self-play, RLHF), Uber AI (POET), Sony AI; researchers Rich Sutton, David Silver, Sergey Levine, Pieter Abbeel, Jeff Clune, Kenneth Stanley & Joel Lehman, Chelsea Finn.

Obstacles: Sample inefficiency & compute hunger; reward misspecification/exploits; maintaining open-endedness; sim-to-real transfer; oversight for multi-agent dynamics.

Essential papers (starter pack): AlphaGo/AlphaZero/MuZero; OpenAI Emergent Tool Use (“hide-and-seek”); Novelty Search/Abandoning Objectives; POET/Enhanced POET.

Part 5: Integrated Hybrids (Convergence)

Thesis: The first true AGI may be an assembly, not a monolith: deep models for perception/language, symbolic logic for consistency, RL for adaptation, all orchestrated by an architectural “brain.”

Why it matters: Most realistic route to robust, trustworthy systems; enables layered safety checks.

What to watch: Tool-using agents with verifiable reasoning, cross-module training, end-to-end open-world evaluations.

Who’s backing it: Google DeepMind (learning + planning; Gemini Agents), OpenAI (agents, tool-use/code execution), Microsoft (AutoGen/agent ecosystems), Anthropic (constitutional checks), Meta (world-models/agents), academic consortia building agent frameworks.

Obstacles: Orchestration complexity; emergent interaction failures; end-to-end verification; latency/cost from multi-module stacks; supply-chain & governance of composite systems.

Essential papers (starter pack): ReAct (reason+act loop); Toolformer (self-taught tool use); Gato (generalist agent); PaLM-E (embodied LLM); Constitutional AI; Voyager (continual tool-using agent).

What you’ll get out of this mini-series

A clear roadmap for the future of AI

Briefings on the five pathways, milestone checklists and leading indicators, so you know what to expect next and how to tell signal from noise.

How to read reality better

Practical lenses to interpret headlines, inventions and products like smart glasses, turning scattered news into a coherent picture.

How to fund the future

For each pathway, we outline the stack it pulls (compute, power, memory, models, tooling, data, apps) and, for paid subscribers, include a sample educational virtual portfolio to show how research maps to concrete companies and infrastructure.

Prepared by AI Monaco

AI Monaco is a leading-edge research firm that specializes in utilizing AI-powered analytics and data-driven insights to provide clients with exceptional market intelligence. We focus on offering deep dives into key sectors like AI technologies, data analytics and innovative tech industries.
