Introduction

Welcome back to Laboratory. This week we unpack Epoch AI’s “What you need to know about AI data centers” by Anson Ho, Ben Cottier, and Yafah Edelman (Nov 4, 2025). The piece reframes frontier AI as power-first infrastructure: gigawatt campuses with high-density compute and liquid cooling, anchored by firm power strategies, pragmatic grid integration, and the security realities of massive physical footprints.

In this briefing, we chart:

Power Shapes Strategy: how energy availability, regulation, and siting drive the map more than user proximity.

Density Dictates Design: how concentrated compute pushes thermal choices, water planning, and capital allocation.

Centralize vs Distribute: how to balance single-site simplicity with multi-site flexibility for resilience and growth.

Executive summary

AI data centers are shifting from “big server rooms” to national-scale infrastructure. The core constraint is power. Location choices follow power first, everything else second. What makes AI campuses special is not only total megawatts, but exceptional power density per rack that forces a move to liquid cooling and new water and permitting regimes. Climate impacts remain modest in aggregate today, yet they are locally material and likely to grow. For the next two years, centralized training remains feasible at single sites, although decentralized strategies will stay on the table for grid and risk reasons. Secrecy is hard at gigawatt scale because cooling and substation footprints are visible from space, so security has to rest on hardening rather than concealment.

1) The scale: from buildings to megaprojects

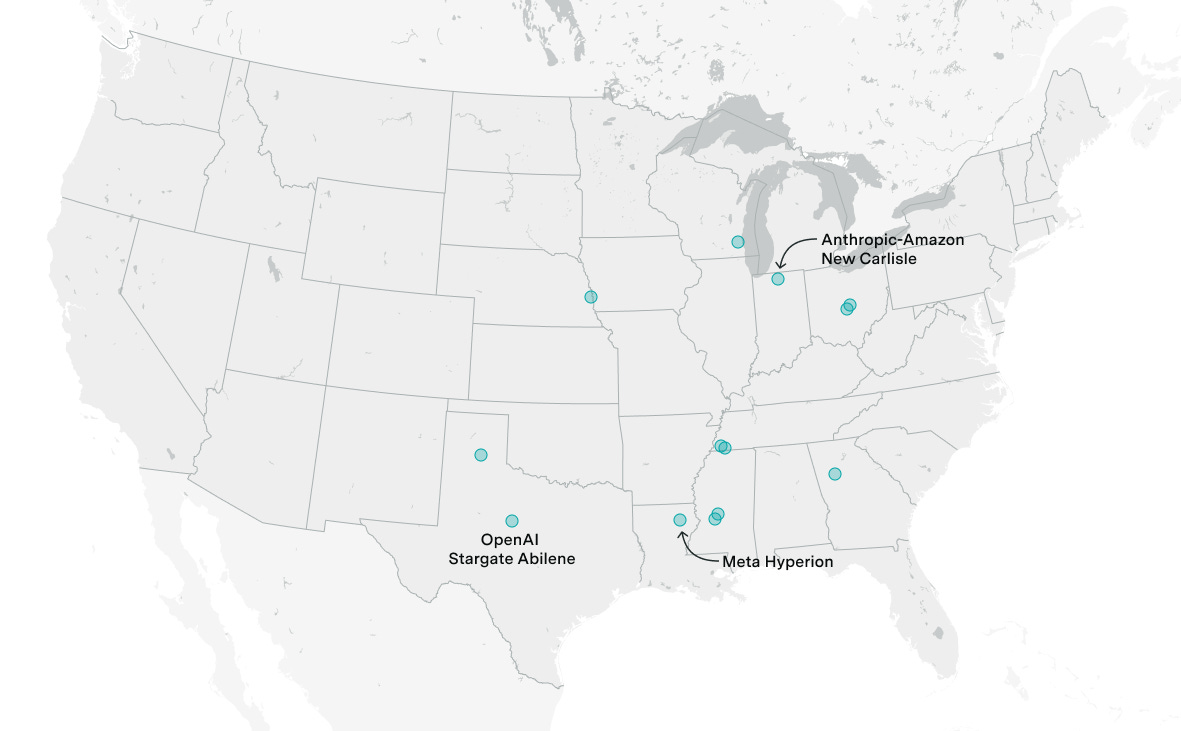

OpenAI’s Stargate Abilene anchors the order of magnitude:

Power: roughly the electricity to serve Seattle.

Compute: more than 250× the computing power of the supercomputer used to train GPT-4.

Land: larger than 450 soccer fields.

Capex: about 32 billion dollars in construction and IT.

Workforce: a few thousand on-site during buildout.

Timeline: around two years for initial construction.

And Stargate is only one node. By the end of 2027, cumulative AI data center investment could reach hundreds of billions of dollars, rivaling historical programs like Apollo and the Manhattan Project in economic scale.

2) Power: the hard constraint that picks the map

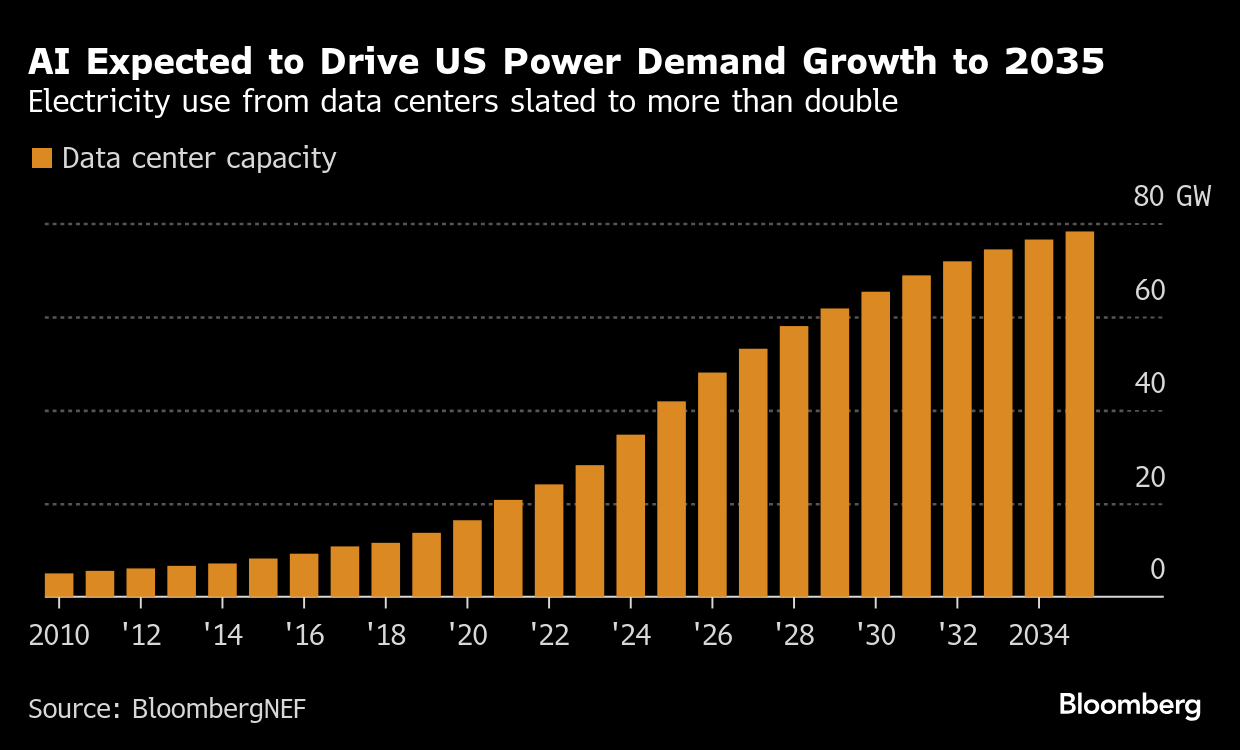

In the United States, AI data centers are on track to need 20 to 30 GW by late 2027.

That is about 5% of average US generation capacity.

By comparison, 30 GW equals roughly 25% of Japan’s capacity, 50% of France’s, and 90% of the UK’s.
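As a rough cross-check, the percentages above can be inverted to see what national capacity each comparison implies. This is a back-of-envelope sketch only: the shares are the rounded figures quoted in this section, and the function and variable names are illustrative, not taken from the Epoch AI piece.

```python
# Back-of-envelope: invert the quoted shares to get the implied national capacity.
# Inputs are the rounded figures from the text, so outputs are rough, not official statistics.
AI_DEMAND_GW = 30.0  # upper end of the 20 to 30 GW range quoted above

QUOTED_SHARE = {
    "Japan": 0.25,
    "France": 0.50,
    "United Kingdom": 0.90,
}


def implied_capacity_gw(demand_gw: float, share: float) -> float:
    """Capacity that makes `demand_gw` equal to `share` of the total."""
    return demand_gw / share


implied = {
    country: implied_capacity_gw(AI_DEMAND_GW, share)
    for country, share in QUOTED_SHARE.items()
}

for country, gw in implied.items():
    print(f"{country}: roughly {gw:.0f} GW implied")
```

The point of the exercise is scale: a demand figure that is a small slice of US capacity would consume most of the UK's.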

Result: the near-term buildout clusters in power-abundant regions and power-abundant countries.

Inside a country: siting gravitates to states with easier interconnects and firm fuel, for example Texas and parts of the Midwest and South, where natural gas and streamlined permitting shorten the path from substation to server hall.

Latency myth: physical distance to end users matters less than most expect. The time a model spends generating a response dwarfs round-trip network latency, and training runs are not latency-sensitive to users at all. For LLMs, shaving a few milliseconds off fiber routes rarely beats adding more compute.

3) Where the electrons come from

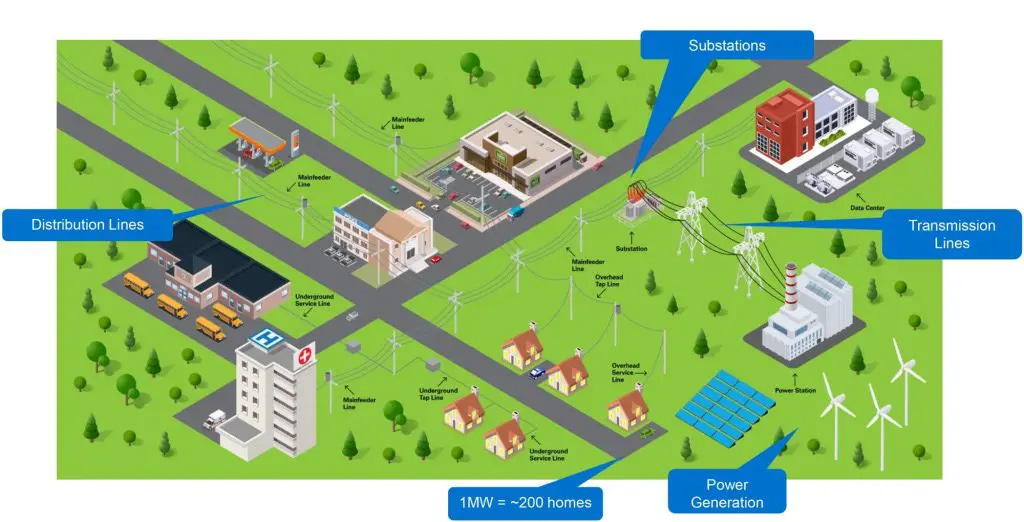

Primary supply: a mix of grid interconnection and on-site generation. Many campuses do both. Example paths include:

On-site natural gas turbines for early power and stability.

Progressive grid tie-in to scale capacity and integrate wind or solar.

Backup: diesel generators remain standard for emergencies, yet their run time is limited by air-permit thresholds, which keeps diesel as a short-duration safety net rather than a primary supply.

Renewables and “matching”: expect more PPA contracts, renewable matching, and storage pilots. Important nuance: some announced “green” additions match emissions rather than physically power the racks on a 24x7 basis. The engineering reality is that near-term firm power still leans on gas while grids catch up.

4) What makes AI data centers different: density

The signature difference is power density:

An NVIDIA NVL72 rack consolidates 72 GPUs in roughly a 0.5 m² footprint and a bit over 2 m tall.

Each such rack can draw 100 kW or more.

Traditional enterprise racks often sit near 10 kW.

Do the math across thousands of racks and you get gigawatt campuses.
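The arithmetic can be sketched in a few lines. This uses only the rack figures quoted above (100 kW, 0.5 m², 72 GPUs, 10 kW for an enterprise rack); the 1 GW target and the helper name are illustrative assumptions, and the result covers IT load only, not cooling or power-conversion overhead.

```python
import math

# Figures quoted in this section.
RACK_POWER_KW = 100.0       # NVL72-class rack draw
RACK_FOOTPRINT_M2 = 0.5     # approximate floor area per rack
GPUS_PER_RACK = 72
ENTERPRISE_RACK_KW = 10.0   # typical traditional rack


def racks_for_it_load(target_gw: float, rack_kw: float = RACK_POWER_KW) -> int:
    """Number of racks whose combined draw reaches `target_gw` of IT load."""
    return math.ceil(target_gw * 1_000_000 / rack_kw)  # 1 GW = 1,000,000 kW


racks = racks_for_it_load(1.0)              # hypothetical 1 GW campus
gpus = racks * GPUS_PER_RACK
density_kw_per_m2 = RACK_POWER_KW / RACK_FOOTPRINT_M2
density_ratio = RACK_POWER_KW / ENTERPRISE_RACK_KW

print(f"Racks for 1 GW of IT load: {racks:,}")
print(f"GPUs at {GPUS_PER_RACK} per rack: {gpus:,}")
print(f"Footprint power density: {density_kw_per_m2:.0f} kW per m^2")
print(f"Rack power vs. enterprise rack: {density_ratio:.0f}x")
```

On these numbers, ten thousand racks already account for a gigawatt of IT load, which is why "thousands of racks" and "gigawatt campus" describe the same site.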

High density is not optional. Training requires GPUs and CPUs to communicate at extreme bandwidth with minimal latency, which drives tight physical packing and specialized networking fabrics.

5) Cooling: liquid by default

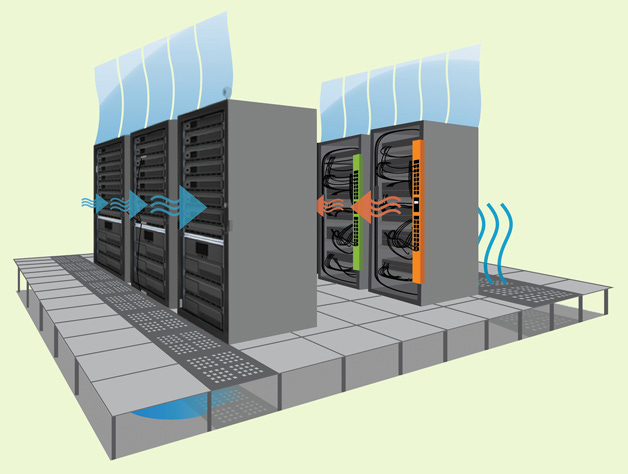

Air alone cannot carry away that much heat. Modern AI halls standardize on direct-to-chip liquid cooling:

Cold plates mounted on GPUs and CPUs move heat into a coolant loop inside the rack.

Heat transfers from coolant to facility water loops.

Heat is expelled outdoors by either:

Water-cooled chillers plus cooling towers with evaporation, or

Air-cooled chillers that avoid evaporative loss and reuse water, trading higher electrical load for lower water draw.

Because power density is extreme, much of the cooling plant lives outdoors and is unmistakable in satellite imagery.
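To see why air falls short, one can compare the flow needed to carry away a rack's heat using the standard relation Q = ṁ · c_p · ΔT. The sketch below is illustrative only: the 10 K temperature rise is an assumption applied to both fluids, the fluid properties are approximate room-temperature textbook values, and real loops often use a water-glycol mix rather than pure water.

```python
# Heat carried by a fluid stream: Q = m_dot * c_p * delta_T
# Approximate room-temperature properties (assumed textbook values).
WATER_CP = 4186.0      # J/(kg*K)
WATER_DENSITY = 997.0  # kg/m^3
AIR_CP = 1005.0        # J/(kg*K)
AIR_DENSITY = 1.2      # kg/m^3

RACK_HEAT_W = 100_000.0  # 100 kW rack, from the density section
DELTA_T_K = 10.0         # assumed coolant temperature rise, same for both fluids


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow needed to absorb `heat_w` with a temperature rise of `delta_t_k`."""
    return heat_w / (cp * delta_t_k)


water_m3_s = mass_flow_kg_s(RACK_HEAT_W, WATER_CP, DELTA_T_K) / WATER_DENSITY
air_m3_s = mass_flow_kg_s(RACK_HEAT_W, AIR_CP, DELTA_T_K) / AIR_DENSITY

print(f"Water: {water_m3_s * 1000:.2f} L/s per rack")
print(f"Air:   {air_m3_s:.2f} m^3/s per rack")
print(f"Air needs about {air_m3_s / water_m3_s:,.0f}x the volume flow of water")
```

Under these assumptions a single rack needs a few liters of water per second but several cubic meters of air per second, a gap of more than three orders of magnitude in volume flow.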

6) Energy and water: today’s footprint vs tomorrow’s

Today in the US:

Data centers collectively draw on the order of 1% of electricity, far below air conditioning at about 12% and lighting at about 8%.

Direct water use by US data centers in 2023 was roughly 17.4 billion gallons, compared to 36.5 trillion gallons for agriculture.
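Putting the two water figures on the same scale makes the gap concrete. A minimal sketch using only the numbers quoted above; the variable names are illustrative.

```python
# Figures quoted above (US, 2023), in gallons.
DATA_CENTER_WATER_GAL = 17.4e9   # 17.4 billion
AGRICULTURE_WATER_GAL = 36.5e12  # 36.5 trillion

share_of_agriculture = DATA_CENTER_WATER_GAL / AGRICULTURE_WATER_GAL
agriculture_multiple = AGRICULTURE_WATER_GAL / DATA_CENTER_WATER_GAL

print(f"Data centers use {share_of_agriculture:.3%} of agriculture's volume")
print(f"Agriculture uses about {agriculture_multiple:,.0f}x as much water")
```

On the quoted figures, direct data center water use is roughly one two-thousandth of agricultural use, which is why the concern is local rather than national.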

However:

Local effects can be significant where power and water are constrained.

If the sector reaches 5% of US generation by 2027 and continues to expand, the climate and water debates will intensify.

Expect growing attention to 24x7 carbon-free energy, grid flexibility programs that curtail 1 to 5 percent of load during peaks, waste-heat reuse, and non-evaporative cooling.

7) Centralized vs decentralized training: the next two years

On current trends:

The largest single training run by 2027 could need around 2.5 million H100-equivalent GPUs.

Announced campuses such as Microsoft Fairwater suggest that single-site capacity on that order is feasible.
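A rough sense of the power behind that GPU count can be sketched as follows. This is an illustration under stated assumptions, not the Epoch AI estimate: it uses the H100 SXM's 700 W rated power per chip, and `OVERHEAD_FACTOR` is a hypothetical multiplier for servers, networking, and cooling. Because "H100-equivalent" measures compute, a run on newer, more efficient chips could draw less than this suggests.

```python
# Rough facility power for a run sized in H100-equivalents.
H100_EQUIVALENTS = 2_500_000  # figure quoted above
H100_TDP_W = 700.0            # rated power of an H100 SXM module
OVERHEAD_FACTOR = 1.5         # hypothetical: servers, networking, cooling on top of the chips


def facility_power_gw(gpus: int, watts_per_gpu: float, overhead: float) -> float:
    """Total power in GW: chip power scaled by an all-in overhead multiplier."""
    return gpus * watts_per_gpu * overhead / 1e9


chips_only_gw = facility_power_gw(H100_EQUIVALENTS, H100_TDP_W, 1.0)
all_in_gw = facility_power_gw(H100_EQUIVALENTS, H100_TDP_W, OVERHEAD_FACTOR)

print(f"Chips only: {chips_only_gw:.2f} GW")
print(f"With assumed {OVERHEAD_FACTOR}x overhead: {all_in_gw:.2f} GW")
```

Either way the result lands in the low single-digit gigawatts, the same order as the largest campuses now being announced.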

Why stay centralized:

Simpler orchestration and fewer failure modes.

Potentially lower capex and permitting friction when concentrated.

Why decentralize:

Opportunistic access to excess local power.

Risk management across weather and grid events.

Best guess: centralized training remains the default through 2027, with selective decentralization as a power strategy rather than a necessity.

8) Security and visibility at gigawatt scale

Secrecy is hard when:

Construction involves thousands of workers and dozens of contractors.

Cooling yards, switchyards, and transmission spurs advertise themselves in aerial and satellite imagery.

The result: a project that resembles the Manhattan Project in cost, yet cannot replicate its opacity.

Implication: security emphasis shifts from location secrecy to operational hardening, supply chain assurance, access controls, and cyber-physical resilience.

Conclusion

The AI buildout is a power story framed by density and cooling. Countries and regions that can marshal tens of gigawatts of reliable power, issue permits quickly, and source specialized equipment will set the pace of capability growth. Aggregate climate and water impacts are limited today, yet rising trajectories make local planning and credible mitigation essential. For at least the next two years, expect the frontier to be written by centralized mega-sites, with decentralized training used tactically where power pockets exist.

Bottom line: track power first, then cooling and water, then supply chains and permits. That hierarchy explains where the next breakthroughs will actually run.

For the full details: What you need to know about AI data centers
